- What Is Prompt Engineering and Why It Matters

- 6 Core Prompt Engineering Techniques That Actually Work

- Role Prompting

- Zero-Shot Prompting

- Few-Shot Prompting

- Chain-of-Thought Prompting (CoT)

- Meta Prompting

- Retrieval-Augmented Generation

- The Most Common Mistakes Beginners Make (and How to Fix Them)

- How to Debug and Improve Your Prompts

- Practical Prompt Templates You Can Use

If you are starting out and your AI results feel weak or generic, the fault often lies with the prompt, not the model. Mastering a few core prompt engineering techniques is the most direct route to consistent, high-quality output.

This guide breaks down the six essential prompt engineering techniques beginners need to know, along with an analysis of common mistakes and their proven fixes. Use these simple methods to stop getting confusing results and make the AI a reliable tool that works for you.

What Is Prompt Engineering and Why It Matters

Prompt Engineering is the practice of strategically structuring the text input to elicit a desired response from a large language model (LLM).

You are not merely typing words. You are providing precise instructions to a pattern-recognition engine.

The quality of your output is a direct mirror of the clarity in your input. Fuzzy input equals fuzzy output. That is the rule.

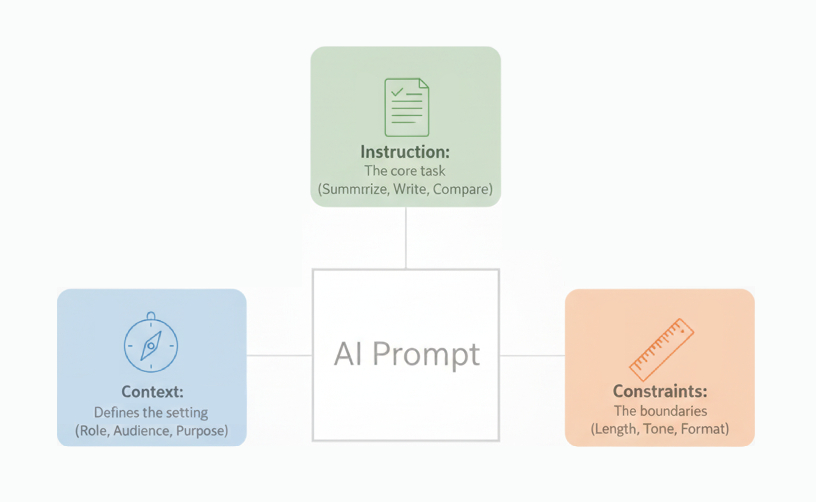

An effective prompt consists of three essential components that define its prompt structure:

- Context: Defines the setting. This includes the role, the audience, and the overall purpose of the request.

- Instruction: The core task. This tells the model what action to take, such as summarising, writing, or comparing.

- Constraints: The boundaries. This sets measurable limits on length, tone, and format.

Changing one component alters the final result entirely.

For example, asking "Write about marketing" yields a useless, broad definition.

Asking, "Act as a marketing consultant with 20 years of experience. Write three bullet points on affiliate marketing for small shop owners in a friendly, practical tone," yields focused, actionable advice. This is the difference.
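As a minimal sketch of the three-part structure, the Python snippet below assembles a prompt from context, instruction, and constraints. The function name `build_prompt` and the labelled layout are illustrative assumptions, not a required format; the example values are adapted from the marketing prompt above.

```python
def build_prompt(context: str, instruction: str, constraints: list[str]) -> str:
    """Join the three components into one labelled prompt string."""
    constraint_lines = "\n".join(f"- {c}" for c in constraints)
    return (
        f"Context: {context}\n"
        f"Instruction: {instruction}\n"
        f"Constraints:\n{constraint_lines}"
    )


prompt = build_prompt(
    context="Act as a marketing consultant with 20 years of experience advising small shop owners.",
    instruction="Write three bullet points on affiliate marketing.",
    constraints=["Friendly, practical tone", "Exactly three bullet points"],
)
print(prompt)
```

Changing only one argument while holding the other two fixed is an easy way to see how much a single component shifts the result.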

6 Core Prompt Engineering Techniques That Actually Work

1. Role Prompting

You must tell the model the identity it should adopt.

When given a professional persona, the model tends to draw on the vocabulary and tone associated with that role. The assigned role also steers tone and intent: a "legal advisor" sounds cautious, while a "startup founder" sounds bold.

Output is usually more focused when the model knows who it is speaking as.

Role Prompting Example:

Instead of "Summarise this data," use "Act as a non-executive board member reporting to the CEO. Summarise the Q4 sales data."

Beginners often get this wrong by failing to specify a clear role or authority for the model, which results in neutral, uninspired, and generalized text.
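One way to make the role explicit every time is to prepend it in code. The helper below is a small sketch; `role_prompt` is a hypothetical name, and the second role is included only to show how the same task reads under a different persona.

```python
def role_prompt(role: str, task: str) -> str:
    """Prefix a task with the persona the model should adopt."""
    return f"Act as {role}. {task}"


task = "Summarise the Q4 sales data."
roles = (
    "a non-executive board member reporting to the CEO",
    "a cautious legal advisor",
)
for role in roles:
    print(role_prompt(role, task))
```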

2. Zero-Shot Prompting

Zero-Shot Prompting is the simplest form of prompting: the model must complete a task without any examples provided in the prompt itself.

You rely entirely on the model's pre-trained knowledge to execute the instruction. Zero-Shot works best for common tasks and simple instructions where no specific style or custom format is required.

Zero-Shot Example:

"Translate the following sentence to French: 'The meeting is scheduled for Tuesday.'"

3. Few-Shot Prompting

Few-Shot Prompting means giving the model a few examples of the desired style, format, or structure.

The model does not just read your rule; it mimics the pattern you provide. This is essential for maintaining brand consistency and achieving a specific creative style.

- If you need a very formal tone, provide two examples of formal writing.

- If you need a specific table layout, provide a small example table.

Few-Shot Example:

You need to classify customer sentiment using a specific format: [POSITIVE], [NEGATIVE], or [NEUTRAL].

Input Example 1: The app is a bit slow but the features are great.

Desired Output 1: [NEUTRAL]

Input Example 2: I can't believe how easy this was to set up!

Desired Output 2: [POSITIVE]

Task: Now, apply the same classification format to the following feedback: "The product is too expensive, but the packaging design is excellent."
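The example above can be assembled programmatically so the input/output pattern stays identical across examples. This is a sketch under assumptions: the `Input:`/`Output:` labels and the function name `few_shot_prompt` are illustrative choices, not a fixed convention.

```python
EXAMPLES = [
    ("The app is a bit slow but the features are great.", "[NEUTRAL]"),
    ("I can't believe how easy this was to set up!", "[POSITIVE]"),
]


def few_shot_prompt(examples: list[tuple[str, str]], new_input: str) -> str:
    """Build a prompt that shows labelled examples, then the item to classify."""
    lines = ["Classify the sentiment of each input as [POSITIVE], [NEGATIVE], or [NEUTRAL]."]
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
    # End on an open "Output:" so the model continues the pattern.
    lines.append(f"Input: {new_input}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = few_shot_prompt(
    EXAMPLES,
    "The product is too expensive, but the packaging design is excellent.",
)
print(prompt)
```

Ending the prompt on an unfilled `Output:` line nudges the model to answer with a label in the same bracketed format.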

This is a key strategy for prompt refinement when style is paramount.

Beginners often get this wrong by omitting examples for abstract tasks like tone or style, leading to outputs that fail to match the required visual or literary quality.

4. Chain-of-Thought Prompting (CoT)

For any task involving complex logic, analysis, or multi-step reasoning, you need Chain-of-Thought.

This technique guides the model to reason through a problem step-by-step.

It often reduces errors and improves accuracy because the model writes out intermediate steps instead of jumping straight to a conclusion.

How to use CoT:

- Start your prompt with the instruction: "Think step-by-step before providing the final answer."

- Provide a numbered list of tasks: "First, list all potential risks. Second, rank them by impact. Third, suggest a mitigation plan for the top risk."
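Both tips can be combined in a small helper. The sketch below is illustrative: `cot_prompt` is a hypothetical name, the task sentence is invented for the example, and the closing "Final answer:" line is an added convention that makes the result easier to find.

```python
def cot_prompt(task: str, steps: list[str]) -> str:
    """Ask for step-by-step reasoning through an explicit, numbered plan."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return (
        "Think step-by-step before providing the final answer.\n"
        f"Task: {task}\n"
        f"Work through these steps in order:\n{numbered}\n"
        "Finish with a line that starts with 'Final answer:'."
    )


prompt = cot_prompt(
    task="Assess the risks of the proposed product launch.",  # hypothetical task
    steps=[
        "List all potential risks.",
        "Rank them by impact.",
        "Suggest a mitigation plan for the top risk.",
    ],
)
print(prompt)
```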

This is a vital part of the overall prompt engineering process.

Beginners often get this wrong by demanding an immediate final answer to complicated analytical questions, which bypasses the necessary reasoning steps.

5. Meta Prompting

Meta Prompting involves setting a very high-level, persistent set of rules that apply to all subsequent interactions.

This technique turns the model into a strict, personalised assistant that enforces your style guide, exclusion lists, or tone consistently across multiple outputs.

Meta Prompting saves time on repetition because the core constraints stay in the conversation context for the entire session (in many chat tools, as a system prompt) instead of being retyped with every request.

It acts as a primary control layer, defining the model's core operational identity before the specific task begins.
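In code, persistent rules are often carried as the first message of a conversation. The sketch below assumes the common role/content message layout used by many chat interfaces; the helper names and the user requests are hypothetical, and no real API is called.

```python
EDITOR_RULES = (
    "You are a strict technical editor. Format all outputs in Markdown, "
    "avoid passive voice, and never exceed two paragraphs."
)


def start_session(rules: str) -> list[dict[str, str]]:
    """Open a conversation whose first message holds the persistent rules."""
    return [{"role": "system", "content": rules}]


def add_user_turn(history: list[dict[str, str]], text: str) -> list[dict[str, str]]:
    """Return a new history with one more user request appended."""
    return history + [{"role": "user", "content": text}]


session = start_session(EDITOR_RULES)
session = add_user_turn(session, "Tighten the release note I paste next.")
session = add_user_turn(session, "Now do the same for the changelog entry.")
for message in session:
    print(message["role"], "->", message["content"])
```

In a real session the model's replies would be appended between the user turns; the point here is only that the rules are written once and travel with every request.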

Meta Prompt Example:

"You are a strict technical editor. Your primary directive is to ensure all outputs are formatted in Markdown, contain no passive voice, and never exceed two paragraphs."

6. Retrieval-Augmented Generation (RAG)

RAG is a necessary technique for factual accuracy, especially when dealing with proprietary, recent, or very specific non-public data.

This is crucial for enterprises where answers must be based on the most current and authoritative source.

RAG works by retrieving relevant passages from an external, verified knowledge base (like a company database or a set of legal documents) and supplying them to the LLM before it generates an answer.

It reduces hallucination by grounding the LLM's response in verifiable external information, not just its training data.
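The retrieve-then-generate flow can be illustrated with a toy example. Everything below is an assumption made for demonstration: the three documents are invented, and retrieval is plain word overlap, whereas production systems typically use a search index or embeddings.

```python
import re

# Invented stand-in for a company knowledge base.
DOCUMENTS = {
    "q3_report": "Operational expenditure rose in Q3 because of new warehouse leases.",
    "hr_policy": "Employees accrue vacation days monthly.",
    "q2_report": "Operational expenditure was flat in Q2.",
}


def tokenize(text: str) -> set[str]:
    """Lower-case the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: dict[str, str], top_k: int = 1) -> list[str]:
    """Rank documents by how many words they share with the question."""
    query = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda name: len(query & tokenize(documents[name])),
        reverse=True,
    )
    return ranked[:top_k]


def grounded_prompt(question: str, documents: dict[str, str], top_k: int = 1) -> str:
    """Build a prompt that restricts the model to the retrieved sources."""
    names = retrieve(question, documents, top_k)
    sources = "\n".join(f"[{name}] {documents[name]}" for name in names)
    return (
        "Answer using only the sources below. "
        "If the answer is not present, reply 'Data Not Found'.\n"
        f"Sources:\n{sources}\n"
        f"Question: {question}"
    )


question = "How did operational expenditure change in Q3?"
prompt = grounded_prompt(question, DOCUMENTS)
print(prompt)
```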

RAG Prompt Scenario:

"Based only on the financial data provided in the attached Q3 report, summarise the changes in operational expenditure." (The 'attached report' is the retrieval part).

The Most Common Mistakes Beginners Make (and How to Fix Them)

Avoid these common pitfalls to instantly improve your success rate. These prompt engineering mistakes waste time and resources.

Mistake 1: Insufficient Specificity (and relying only on Zero-Shot)

If your request is broad, your result will be unusable. This is often the result of relying on Zero-Shot Prompting for tasks that require more nuance.

- Vague Input: "Write a social media post about our new product."

- The Fix: Specify the platform, audience, and call to action. "You are a brand manager. Write an X (Twitter) post about our new product, targeting tech journalists. The post must ask them to review the product and include a link."

Mistake 2: Overloading the Model

Do not ask the model to perform multiple, unrelated tasks in one submission.

- Ineffective Input: "Summarise this PDF, suggest five keywords, and write a follow-up email."

- The Fix: Separate the tasks. Ask for the summary first. Then use iterative prompting for the subsequent tasks: "Now, using the summary above, suggest five competitive keywords."
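Splitting the work into steps can be sketched as a short chain in which each later request reuses the summary. The `ask` parameter stands for whatever function sends a prompt to your model; `echo_model` is a stand-in written for this example so the snippet runs without any API.

```python
from typing import Callable


def run_in_steps(ask: Callable[[str], str], document: str) -> dict[str, str]:
    """Run three focused requests instead of one overloaded prompt."""
    summary = ask(f"Summarise the following document:\n{document}")
    keywords = ask(f"Using the summary below, suggest five keywords.\nSummary: {summary}")
    email = ask(f"Using the summary below, write a short follow-up email.\nSummary: {summary}")
    return {"summary": summary, "keywords": keywords, "email": email}


def echo_model(prompt: str) -> str:
    """Stand-in for a real model call: echoes the first line of the prompt."""
    return f"<reply to: {prompt.splitlines()[0]}>"


results = run_in_steps(echo_model, "Quarterly planning notes.")
for name, reply in results.items():
    print(name, "=", reply)
```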

Mistake 3: Skipping Iteration

Assuming the first output is the final output is a waste of potential.

No human editor works that way. You should not either. A strong Meta Prompt can reduce the number of revision rounds, but it does not replace them.

- The Fix: Embrace prompt refinement. Treat the first result as a baseline draft.

Refinement Prompt: "The last paragraph is too dry. Make the tone 30% more aggressive and remove all filler words."

This feedback loop is how you move toward reliable, precise output on complex prompt engineering use cases.

Mistake 4: Not Grounding Factual Claims

This mistake means asking the model for specific, proprietary, or highly recent factual information without providing the source data.

This leads to hallucination: the model confidently makes up facts to fill the information gap, which is the exact failure RAG (Retrieval-Augmented Generation) is designed to address.

- Bad Input: "What were our company's Q3 revenue numbers?" (Without providing the report).

- The Fix: Provide the necessary context. "Based only on the Q3 earnings report that follows, what was the total quarterly revenue? If the information is not present, state 'Data Not Found'."

Mistake 5: Ignoring Format and Examples

This mistake means requesting an output in a non-standard style, tone, or format (like a specific table structure or brand voice) without providing a clear example.

The model struggles to reproduce an abstract pattern from a description alone. Few-Shot Prompting gives it a blueprint to copy.

- Vague Input: "Write three product names for our new tool. Make them catchy and unique."

- The Fix: Provide a style example. "Write three product names. The style must mimic the following examples: 'Firebolt,' 'Apex,' 'Quickstep.' Focus on single, strong nouns."

Mistake 6: Bypassing Logic for Complex Tasks

This mistake means asking the model to solve a problem that requires multiple steps of analysis, comparison, or calculation while demanding a final answer immediately.

This prevents the model from using Chain-of-Thought (CoT) reasoning, making logical errors highly likely, especially in math or complex data synthesis.

- Bad Input: "Combine these five facts into a conclusion about risk."

- The Fix: Force the reasoning path. "Analyze the five facts. First, identify the primary risk factor. Second, list the competing evidence. Third, draft a one-sentence conclusion that balances both. Think step-by-step."

How to Debug and Improve Your Prompts

Learning to debug your output is the highest-leverage skill in this entire process.

It is about establishing a clear, repeatable feedback loop.

Step 1: Objectively Analyse the Flaw

Pinpoint the specific error before you edit the prompt.

- Did the output lack personality? (Role/Persona flaw).

- Was the structure messy? (Constraint/Few-Shot flaw).

- Did the logic break down? (Chain-of-Thought flaw).

Identifying the failure mode tells you exactly which part of the prompt needs attention.

Step 2: Adjust One Variable at a Time

Only change the single element that failed in the previous step.

If you rewrite the entire prompt, you cannot learn which change caused the improvement.

By changing only the constraint, for instance, you can confirm that constraint was the problem. This method makes finding the perfect input simple and fast.
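Keeping the prompt as named components makes the one-change rule easy to check before you rerun anything. The sketch below is illustrative; the component values are invented and `changed_components` is a hypothetical helper.

```python
def changed_components(before: dict[str, str], after: dict[str, str]) -> list[str]:
    """List the component names whose text differs between two prompt versions."""
    names = sorted(set(before) | set(after))
    return [name for name in names if before.get(name) != after.get(name)]


baseline = {
    "context": "The audience is the executive team.",
    "instruction": "Summarise the survey results.",
    "constraints": "Use a numbered list.",
}
# Copy the baseline and change exactly one component.
variant = {**baseline, "constraints": "Use a numbered list of at most three items."}

diff = changed_components(baseline, variant)
if len(diff) != 1:
    raise ValueError(f"Expected exactly one change, found: {diff}")
print("Changed component:", diff[0])
```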

Step 3: Use Reflection Prompting for Self-Correction

For essential, high-stakes content, you can require the model to check its own work.

This is a form of self-critique, sometimes called self-refinement.

- Prompt Example: "Generate the output. Then, critically review your response against the 150-word limit and the professional tone requirement. If a conflict exists, rewrite the section and briefly explain the fix."

This forces the model to verify its adherence to your rules before submission.
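Some of these rules can also be checked outside the model. In the sketch below, the word limit is verified in code and the review prompt is built for a follow-up request; the function names are hypothetical, and counting whitespace-separated words is a simplifying assumption.

```python
def word_count(text: str) -> int:
    """Count whitespace-separated words."""
    return len(text.split())


def needs_rewrite(draft: str, word_limit: int = 150) -> bool:
    """Return True when the draft exceeds the word limit."""
    return word_count(draft) > word_limit


def review_prompt(draft: str, word_limit: int = 150) -> str:
    """Build the follow-up request that asks the model to check its own draft."""
    return (
        f"Critically review the draft below against the {word_limit}-word limit "
        "and the professional tone requirement. If a conflict exists, rewrite "
        "the section and briefly explain the fix.\n"
        f"Draft:\n{draft}"
    )


draft = "word " * 160  # placeholder draft that is deliberately too long
if needs_rewrite(draft):
    print(review_prompt(draft))
```

Length is easy to verify deterministically, so it makes sense to check it in code and leave subjective criteria such as tone to the model's review.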

Practical Prompt Templates You Can Use

Start strong by using these tested frameworks. You save time and guarantee the inclusion of essential parameters.

1. Content Creation Prompt

This template works for generating blog sections, email copy, or ad texts.

- Instruction: You are a senior direct-response copywriter focused on 30% click-through rates. Write three subject line options for a cold email.

- Context: The email targets business owners who have high software costs.

- Constraints: Each line must be under 60 characters. Use a slightly provocative tone. The output must be a bulleted list.

- User Input Placeholder: [Insert the core promise or unique selling proposition here]

- User Input Placeholder: [Specify the target platform, e.g., LinkedIn ad, email, blog post H2]

2. Data Analysis Prompt

Use this when you need a clear summary of raw data for non-technical readers.

- Instruction: Analyse the following raw survey data and pull out the top three insights related to customer dissatisfaction.

- Context: The final report is for the executive team.

- Constraints: Present the insights as a numbered list. Each point must be a single, clear sentence. Do not use any internal financial jargon.

- User Input Placeholder: [Paste the raw data or link to the data source here]

- User Input Placeholder: [Specify the key metric you want to focus on, e.g., sales, sentiment, churn rate]

3. Coding Prompt

This template helps ensure clean, readable, and functional code for a specific language.

- Instruction: Write a function to check if a user’s input password meets security requirements.

- Context: The code will be deployed on a customer-facing login screen.

- Constraints: The code must be in Python. Requirements are: minimum 8 characters, at least one capital letter, and one number. Output the code in a single code block.

- User Input Placeholder: [Specify the required programming language, e.g., Python, JavaScript, SQL]

- User Input Placeholder: [Clearly describe the function's intended purpose and inputs]
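For reference, a response to this template might look roughly like the function below. It is a hand-written sketch of the three stated rules, not actual model output, and the name `meets_requirements` is an assumption.

```python
def meets_requirements(password: str) -> bool:
    """Check the three rules: length, one capital letter, one number."""
    return (
        len(password) >= 8
        and any(ch.isupper() for ch in password)
        and any(ch.isdigit() for ch in password)
    )


for candidate in ("Secret123", "secret123", "Sec1"):
    print(candidate, meets_requirements(candidate))
```

Having a reference like this on hand makes it easier to judge whether the model's answer actually satisfies each constraint.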

4. Educational Prompt

This is useful for simplifying complex topics for a specific audience.

- Instruction: Explain the concept of 'supply chain elasticity' in simple terms.

- Context: The audience is first-year university students who have no business background.

- Constraints: The explanation must use a relatable, everyday analogy. The final explanation should be 200 words maximum. Use an encouraging tone.

- User Input Placeholder: [Insert the complex concept or academic term here]

- User Input Placeholder: [Define the target student level, e.g., high school, undergraduate, general public]

5. Creative Writing Prompt

Use this for generating specific creative content, like dialogue or a scene description.

- Instruction: Write the opening scene for a short story.

- Context: The genre is science fiction, focusing on a lonely character on a remote space station.

- Constraints: The scene must be under 150 words. Use heavy descriptive language focused on the feeling of isolation and the colour blue. Write from a third-person perspective.

- User Input Placeholder: [Describe the key character and their primary emotional state]

- User Input Placeholder: [Outline the setting and the specific visual or sensory details required]

These templates are a foundation for effective input design. For the most specific and accurate output, further debugging is always advised. Your commitment to refinement is key to getting the answer exactly as you want it.
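If you reuse a template often, the placeholders can be filled in code. The sketch below uses Python's standard `string.Template`; the template wording is condensed from the content creation prompt, and the placeholder names `platform` and `promise` are assumptions chosen for this example.

```python
from string import Template

CONTENT_TEMPLATE = Template(
    "Instruction: You are a senior direct-response copywriter. "
    "Write three subject line options for a $platform.\n"
    "Context: The core promise is: $promise\n"
    "Constraints: Each line must be under 60 characters. "
    "The output must be a bulleted list."
)


def fill(template: Template, **values: str) -> str:
    """Fill every placeholder; substitute() raises KeyError if one is missing."""
    return template.substitute(**values)


filled = fill(
    CONTENT_TEMPLATE,
    platform="cold email",
    promise="Cut software costs without switching tools",
)
print(filled)
```

Using `substitute` rather than `safe_substitute` is deliberate: a forgotten placeholder fails loudly instead of reaching the model as literal `$promise` text.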

Conclusion

The core principle remains simple: clarity drives quality. Prompt engineering is not about finding obscure magic words; it is about providing clear direction and precise constraint setting.

You now have the techniques—the frameworks, the mistakes to avoid, and the templates—to stop wasting time on fuzzy, unreliable outputs. Use these resources, embrace the process of iteration, and commit to being a precise director.
