Have you ever wanted to experiment with powerful AI models without worrying about privacy or cloud costs? Running a Large Language Model (LLM) on your own device might be the perfect solution.

In this guide, we’ll walk you through what a local LLM is, what you need to run one, and how to get started—plus some tools and model recommendations to make the journey easier.

What is a local LLM?

A local LLM (Large Language Model) is an AI model that runs entirely on your own device, whether it’s a laptop, desktop, or private server, without relying on the internet or cloud services once the model has been downloaded.

Most people are familiar with cloud-based AI tools like ChatGPT or Google’s Gemini, where your input is sent to servers run by the provider (OpenAI or Google, respectively). These are often called online LLMs, and while they’re powerful and convenient, they also raise concerns about privacy, cost, and control.

That’s where local LLMs come in. By running the model on your own hardware, you can keep your data private, avoid API fees, and experiment freely.

Local LLM vs Online LLM

| | Local LLM | Online LLM |
|---|---|---|
| Runs on | Your own hardware | Cloud servers |
| Internet needed | No (only to download the model) | Yes |
| Data privacy | High (data stays local) | Depends on provider |
| Cost | One-time setup, no per-use fees | Ongoing API or subscription fees |
| Model size | Smaller models (typically 7B–13B parameters) | Access to large-scale models |

Local LLMs are a great way to explore AI hands-on, especially if you care about privacy or want more control. Let’s look at what it takes to get one running.

What You Need to Run a Local LLM

Before you dive into running a local LLM, it’s worth understanding what’s actually involved. Unlike chatting with an online AI, running a model locally means you’re responsible for the computing power, the tools, and the model itself.

Let’s break down what that means.

Hardware Requirements

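Running a large language model might sound intimidating, but you don’t necessarily need a high-end server or a dedicated GPU. A modern laptop with at least 8GB of RAM can run small models, though 16GB or more of RAM and a capable CPU or GPU make a noticeable difference in speed. As a rough guide, the documentation for Ollama (the tool used later in this guide) suggests at least 8GB of RAM for 7B models, 16GB for 13B models, and 32GB for 33B models.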
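A back-of-the-envelope way to relate model size to RAM is sketched below. It assumes each weight takes bits-per-weight ÷ 8 bytes, plus a hypothetical 20% overhead for the context cache and runtime buffers; real usage varies by tool, quantization format, and context length, so treat the results as ballpark figures only. The function name is illustrative.

```python
def estimate_memory_gb(params_billion: float, bits_per_weight: int = 4,
                       overhead: float = 1.2) -> float:
    """Ballpark RAM (in GB) needed to load a model.

    Assumptions: each weight takes bits_per_weight / 8 bytes, and a
    hypothetical 20% overhead (overhead=1.2) covers the context cache
    and runtime buffers. Real usage depends on the tool and settings.
    """
    # 1 billion parameters at 8 bits each is roughly 1 GB of weights.
    weights_gb = params_billion * bits_per_weight / 8
    return round(weights_gb * overhead, 1)


# Compare common quantization levels for a 7B model.
for bits in (4, 8, 16):
    print(f"7B model at {bits}-bit: ~{estimate_memory_gb(7, bits)} GB")
```

Under these assumptions a 4-bit 7B model lands around 4.2 GB, which is why quantized 7B models are a common starting point on an 8GB machine, while the same model at 16-bit precision (about 16.8 GB) would not fit.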

Software Tools

You’ll also need some software to actually load the model and let you interact with it. These tools act as a local engine, turning raw model files into a chat interface, an API endpoint, or even a browser UI.

There are many tools available, ranging from simple command-line apps to full graphical interfaces. When choosing one, think about:

● Your comfort level with technical setup

● Whether you prefer a visual interface or terminal

● What operating system you're using

LLM Models

The “LLM” part refers to the model itself—the brain behind the operation. These models come in different sizes and strengths, and what you choose depends on your hardware and goals.

Some models are better at general conversation, others at writing code or summarizing documents. Smaller models run faster and use less memory, but may offer simpler responses. Larger models can be more capable, but they’ll need more resources to run smoothly.
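To turn “depends on your hardware” into a concrete starting point, the sketch below encodes the rough guidance from Ollama’s README (about 8 GB of RAM for 7B models, 16 GB for 13B, and 32 GB for 33B) as a simple lookup. The thresholds are a rule of thumb rather than hard limits, and the names are hypothetical.

```python
from typing import Optional

# Rough RAM guidance from Ollama's README: (minimum RAM in GB, model size in billions).
# Ordered largest first so the first match is the biggest model that fits.
RAM_GUIDE = [(32, 33), (16, 13), (8, 7)]


def largest_model_size(ram_gb: float) -> Optional[int]:
    """Return the largest suggested model size (billions of parameters) for ram_gb.

    Returns None below 8 GB, where tiny models (roughly 1B to 3B) are the safer bet.
    """
    for min_ram, size_b in RAM_GUIDE:
        if ram_gb >= min_ram:
            return size_b
    return None


for ram in (8, 16, 32):
    print(f"{ram} GB RAM -> up to {largest_model_size(ram)}B")
```

Your goals still matter: for coding, a code-tuned model at the size your RAM allows may serve you better than a larger general-purpose one.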

How to Run LLM Locally: Step-by-Step Guide

Now that you know what’s needed, let’s actually run a local LLM. We’ll use Ollama, a lightweight tool that makes running models as easy as typing a command.

Whether you're on Windows, macOS, or Linux, the steps are largely the same.

Step 1: Download a Local LLM Tool

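Head over to ollama.com and download the version for your system. On Windows and macOS, the installer works like any regular app install: just follow the prompts. On Linux, the download page gives you a one-line install script to run in your terminal instead.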

Once it’s done, you’ll be able to use the ollama command from your terminal.
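If you plan to script against Ollama later, it can help to confirm the CLI is actually on your PATH. This small Python sketch (the helper name is hypothetical) looks for the ollama executable and runs ollama --version; typing that command directly in a terminal does the same job.

```python
import shutil
import subprocess
from typing import Callable, Optional


def ollama_version(which: Callable[[str], Optional[str]] = shutil.which) -> Optional[str]:
    """Return the output of `ollama --version`, or None if ollama isn't on PATH.

    `which` is injectable so the check can be tested without Ollama installed.
    """
    path = which("ollama")
    if path is None:
        return None
    result = subprocess.run([path, "--version"], capture_output=True, text=True, check=False)
    # Fall back to stderr in case the CLI writes its message there.
    return result.stdout.strip() or result.stderr.strip()


print(ollama_version() or "ollama not found on PATH")
```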

Step 2: Run Your First Model

Open a terminal (Command Prompt or PowerShell on Windows, Terminal on macOS or Linux) and enter the following:

```shell
ollama run llama3
```

This command tells Ollama to download and start LLaMA 3, a general-purpose model by Meta (by default, the 8B version). The first time you run it, Ollama downloads the model files automatically; they are several gigabytes, so this may take a few minutes depending on your internet speed.

Once the model loads, you’ll be dropped into a simple chat interface. Try typing a question:

```
>>> What's the capital of Japan?
Tokyo.
```

Just like that, you’re chatting with a powerful AI model running entirely on your machine! When you’re done, type /bye to end the session.

Step 3: Connect to Other Apps (Optional)

Once you’re comfortable, you can go further:

● Use Ollama’s local API to build apps or connect with other tools

● Pair it with a browser-based UI (like Open WebUI or Chatbot UI)

● Integrate it into automation platforms like GoInsight.AI for serious workflows
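As a starting point for the first bullet, here is a minimal Python sketch that calls Ollama’s local REST API using only the standard library. It assumes Ollama is running on its default port (11434) and that llama3 has already been downloaded; the helper names are hypothetical. Setting "stream" to false asks for the whole reply as one JSON object whose "response" field holds the text. By default Ollama instead streams one JSON object per line, which join_stream() stitches back together.

```python
import json
import urllib.request
from typing import Iterable

# Assumption: Ollama is running locally on its default port.
OLLAMA_GENERATE_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint (non-streaming)."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_GENERATE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3", timeout: float = 300) -> str:
    """Send a prompt to the local model and return its full reply."""
    with urllib.request.urlopen(build_generate_request(prompt, model), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False, the whole reply is in the "response" field.
    return body["response"]


def join_stream(lines: Iterable[str]) -> str:
    """Reassemble a streamed reply: one JSON object per line, each with a piece of text."""
    parts = []
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        chunk = json.loads(line)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break  # the final chunk has "done": true
    return "".join(parts)
```

For example, generate("What's the capital of Japan?") returns the model’s answer as a string, provided the Ollama server is running. Browser UIs like Open WebUI talk to this same local server.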

Running a local LLM might sound technical at first, but tools like Ollama make it surprisingly accessible—even fun.

Best Open Source Models to Try

With so many open-source LLMs available, choosing the right one can be tricky. The “best” model really depends on your use case. Here's a breakdown by category to help you get started.

General-Purpose Chat Models

LLaMA 3 (Meta): Among the most capable open-source models available at the time of writing. LLaMA 3 comes in multiple sizes (8B, 70B) and delivers impressive language understanding and generation quality. Great for general-purpose tasks.

Mistral 7B: Small but mighty. Mistral 7B is fast and efficient, and at release its developers reported it outperforming the larger Llama 2 13B on their benchmarks. A good choice if you want speed without sacrificing too much quality.

Gemma (Google): A newer entry from Google, tuned for safety and helpfulness. Performs well in dialogue scenarios and integrates easily with Ollama and other tools.

Code Generation Models

Code LLaMA: A variant of LLaMA 2 further trained on code. Supports many popular programming languages and works well for writing or reviewing code snippets.

DeepSeek-Coder: Known for strong performance in both code generation and reasoning tasks. Especially good at understanding complex logic.

StarCoder2: Trained on permissively licensed codebases, StarCoder2 is optimized for developer tasks and integrates well with IDEs.

Lightweight Models

Phi-2 (Microsoft): At 2.7B parameters, Phi-2 is small enough to run smoothly even on CPUs. Ideal for personal projects or learning environments.

TinyLLaMA: A compact 1.1B-parameter model built on the LLaMA 2 architecture, small enough to run on older machines. It’s not the smartest, but it’s incredibly accessible.

Qwen 1.5 1.8B: From Alibaba, this compact model supports both English and Chinese and offers solid multilingual capabilities with minimal resource demands.
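If you’re using Ollama, the models above can be fetched with ollama pull. The tags below are assumptions based on Ollama’s model library at the time of writing (check ollama.com/library, since names and sizes change), and the dictionary and helper names are hypothetical.

```python
import subprocess
from typing import Dict, List

# Assumed Ollama library tags for the models listed above; verify them at
# ollama.com/library before use, since tags can change.
MODEL_TAGS: Dict[str, List[str]] = {
    "general": ["llama3", "mistral", "gemma"],
    "code": ["codellama", "deepseek-coder", "starcoder2"],
    "lightweight": ["phi", "tinyllama", "qwen:1.8b"],  # "phi" is Phi-2
}


def pull_commands(category: str) -> List[List[str]]:
    """Build an `ollama pull <tag>` command for every model in a category."""
    return [["ollama", "pull", tag] for tag in MODEL_TAGS[category]]


def pull_category(category: str) -> None:
    """Download every model in a category (requires the ollama CLI on PATH)."""
    for cmd in pull_commands(category):
        subprocess.run(cmd, check=True)
```

For example, pull_category("lightweight") downloads the three small models; you can then start any of them with ollama run followed by its tag.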

Top Tools to Run Local LLMs

Once you’ve picked a model, the next step is choosing the right tool to run it. These tools help you download, run, and interact with LLMs locally—often with just a few commands. Here are some of the most popular and beginner-friendly options:

1. Ollama

Best for: Beginners who want a simple, no-fuss setup

Ollama makes running LLMs locally incredibly easy. You can download and run models with a single terminal command (like ollama run llama3). It supports popular open-source models like LLaMA 3, Mistral, and Gemma, and handles GPU/CPU compatibility under the hood.

2. LM Studio

Best for: Users who prefer a desktop interface over the command line

LM Studio wraps local LLMs in a clean, user-friendly GUI. You can chat with models directly, manage downloads, and tweak settings—without ever opening a terminal.

3. text-generation-webui

Best for: Tinkerers and advanced users who want full control

text-generation-webui is an open-source web UI and a favorite in the LLM community. It supports a wide variety of models and formats (GGUF, GPTQ, etc.), and offers advanced options like prompt templates, LoRA fine-tuning, and plugin support.

4. Open WebUI

Best for: Teams or collaborative environments

Open WebUI offers a collaborative web-based interface that’s perfect for sharing access to local LLMs within a small team. It integrates well with models hosted via Ollama or text-generation-webui.

5. llama.cpp

Best for: Developers looking for maximum portability

llama.cpp is the C/C++ inference engine behind many lightweight LLM apps. It runs quantized models on CPUs, even on older laptops or Raspberry Pi devices. It’s not the most user-friendly tool on its own, but it’s incredibly efficient and widely used under the hood.
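Several of these tools can also serve models over an OpenAI-compatible HTTP API, so the same client code can talk to any of them. The sketch below assumes each tool’s usual default address (Ollama on port 11434, LM Studio’s local server on 1234, llama.cpp’s llama-server on 8080) and that the server is already running with a model loaded. The dictionary and helper names are hypothetical, and the model name you pass must match one the chosen tool has available.

```python
import json
import urllib.request

# Assumed default base URLs; each tool lets you change the port.
OPENAI_COMPATIBLE_BASES = {
    "ollama": "http://localhost:11434/v1",
    "lm_studio": "http://localhost:1234/v1",
    "llama_cpp": "http://localhost:8080/v1",
}


def build_chat_request(tool: str, model: str, message: str) -> urllib.request.Request:
    """Build a POST to the tool's OpenAI-style /chat/completions endpoint."""
    url = OPENAI_COMPATIBLE_BASES[tool] + "/chat/completions"
    payload = {"model": model, "messages": [{"role": "user", "content": message}]}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(tool: str, model: str, message: str, timeout: float = 120) -> str:
    """Send one user message and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(tool, model, message), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # OpenAI-style responses put the reply in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

Switching tools is then a matter of changing the first argument, for example chat("ollama", "llama3", "Hello") versus chat("lm_studio", ...).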

From Local LLM to Production AI: Try GoInsight.AI

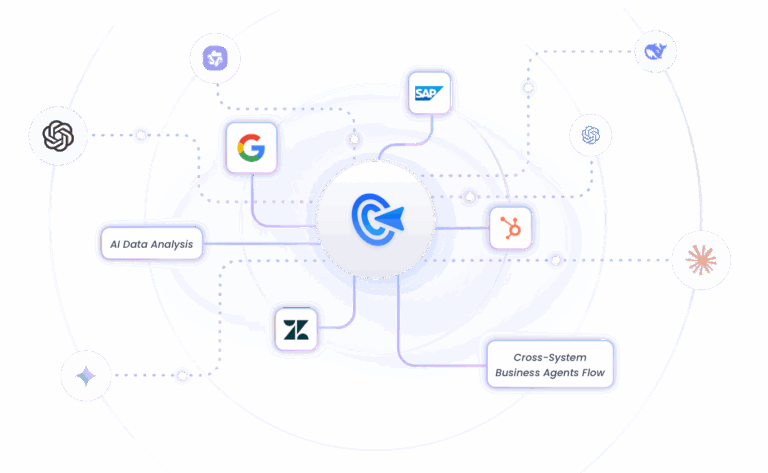

By now, you’ve seen how easy it is to run a local LLM and chat with it using tools like Ollama. But what if you want to go further—say, automate tasks, build custom AI agents, or connect your model with APIs, databases, or even other tools?

That’s where GoInsight.AI comes in.

GoInsight.AI is a powerful platform for building Agentic Workflows—AI-powered automations that not only understand natural language, but can also act on it. It’s designed for both technical and non-technical users, offering:

- Visual Workflow Builder: Easily connect LLMs, APIs, tools, and logic—all with a drag-and-drop interface.

- Multi-Agent Collaboration: Create workflows involving multiple agents that communicate and work together.

- Knowledge Integration: Feed your agents with custom knowledge and contextual memory using RAG.

- Enterprise-Grade Security: Fine-grained permissions, audit logs, and compliance features come built-in.

Whether you're automating internal workflows or building an AI-powered app on top of your local model, GoInsight.AI helps you bridge the gap between “just chatting” and real-world AI execution.

Final Words

Running a local LLM opens up exciting possibilities—from privacy and cost savings to hands-on learning. With the right hardware, tools, and models, you can get started quickly. And if you want to unlock even more power, platforms like GoInsight.AI let you build intelligent workflows that truly bring AI to life. Now it’s your turn to explore and create!
