Large language models (LLMs) have become powerful tools for answering questions, creating content, and assisting with daily tasks. Yet they can sometimes produce information that sounds correct but is actually wrong, a problem known as hallucination. This challenge led to the development of Retrieval-Augmented Generation, or RAG, as a way to make AI responses more reliable.

What Is RAG in AI?

RAG is a technique that extends an LLM by pairing it with a retrieval system. Put simply, RAG lets the model look up relevant information in a connected knowledge base before it generates an answer, so the response is grounded in that source material rather than in the model's memory alone.

Consider an example. Think of an LLM as a brilliant student with an excellent memory whose knowledge is frozen at the moment they finished their last course, just as a model's knowledge is fixed by the data it was trained on. Without access to newer material, that student will miss recent developments and any details that fall outside their coursework. RAG works like giving that student access to a constantly updated library. Now, whenever asked a question, the student not only relies on memory but also checks the library for fresh, specific, and reliable information before responding.

Why Use RAG?

Generic LLMs often give impressive answers, but they have inherent limitations: their knowledge is static and not tailored to your context. They may hallucinate facts and provide inaccurate responses, and they often lack access to domain-specific or enterprise-only information. RAG helps overcome these challenges.

Here are the key benefits of RAG:

- Reduces hallucinations: By grounding answers in documents retrieved from the knowledge base, RAG lowers the chance that the model invents information.

- Incorporates current data: Instead of relying only on training data that may be outdated, RAG fetches the most recent facts, policies, or market updates from the knowledge base it has been given.

- Adds domain-specific knowledge: Enterprises can load internal manuals, policies, compliance documents, or customer FAQs into the retrieval system, making responses highly business-relevant.

- Cites sources: A RAG system can return references to the retrieved documents alongside its answers, improving transparency and trust.

- Cost-effective: Rather than fine-tuning a model repeatedly, enterprises can simply update their knowledge base and get instant improvements.

For businesses, this is very valuable. Teams can standardise how their AI systems respond to queries, reduce the trial and error of prompting, and ensure employees and customers receive consistent, reliable answers grounded in enterprise knowledge.
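To make the "cites sources" benefit listed above more concrete, here is a minimal sketch of one way retrieved passages could be numbered in the prompt and then listed as references next to the answer. The `Chunk` class, the file names, and the prompt wording are all hypothetical, chosen only for illustration; they are not taken from any particular RAG product.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    source: str  # hypothetical document name
    text: str    # passage returned by the retrieval step


def format_context(chunks: list[Chunk]) -> str:
    """Number each retrieved chunk so the model can refer to it as [1], [2], ..."""
    return "\n".join(f"[{i}] {c.text}" for i, c in enumerate(chunks, start=1))


def format_references(chunks: list[Chunk]) -> str:
    """Build the reference list shown to the user next to the answer."""
    return "\n".join(f"[{i}] {c.source}" for i, c in enumerate(chunks, start=1))


# Assume these two chunks came back from the retrieval step.
retrieved = [
    Chunk("hr-policy.pdf", "Employees may carry over up to five unused vacation days."),
    Chunk("it-handbook.md", "Password resets are handled through the self-service portal."),
]

prompt = (
    "Answer the question using the numbered sources and cite them like [1].\n\n"
    + format_context(retrieved)
    + "\n\nQuestion: How many vacation days can I carry over?"
)

print(prompt)
print("Sources:\n" + format_references(retrieved))
```

Because the numbering in the prompt and in the reference list comes from the same ordered list of chunks, a citation such as `[1]` in the model's answer can be traced back to a specific document.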

How Does Retrieval-Augmented Generation Work?

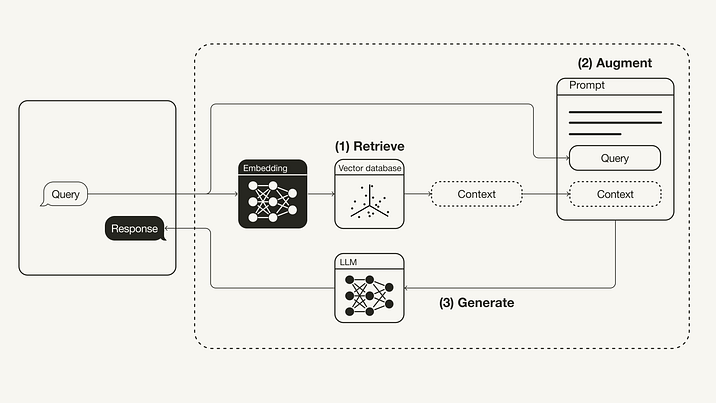

At its core, RAG follows a simple loop:

User → Retrieval → LLM → Answer

In more detail, the steps are:

1. Knowledge preparation: Enterprise documents (policies, manuals, reports, etc.) are stored in a vector database. Text is converted into numerical representations (“embeddings”) so the system can search by meaning, not just keywords.

2. Retrieval: When a user (employee, customer, etc.) asks a question, the system searches the database for the most relevant document segments, so the LLM has access to precise and up-to-date information.

3. Augmentation: These retrieved snippets provide context to the LLM, grounding its responses in real, authoritative information and reducing the risk of hallucinations.

4. Generation: The LLM combines its internal knowledge with the retrieved content to produce a well-informed, context-aware answer.

Together, retrieval, augmentation, and generation steer the model toward the most relevant information available. The key idea is that context is supplied before the answer is produced, which keeps the system's handling of user questions clear and structured.
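The four steps above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not a production design: the "embedding" is a plain bag-of-words count rather than a learned model, the "vector database" is an in-memory list, and `generate` is a placeholder where a real system would call an LLM. The sample documents and all function names are made up for the example.

```python
import math
import re
from collections import Counter

# Toy knowledge base; in practice these would be chunks of enterprise documents.
DOCUMENTS = [
    "Employees may carry over up to five unused vacation days into the next year.",
    "Password resets are handled through the IT self-service portal.",
    "Expense reports must be submitted within 30 days of purchase.",
]


def embed(text: str) -> Counter:
    """Stand-in embedding: a bag-of-words count vector (real systems use a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# 1. Knowledge preparation: embed every document once and keep the vectors.
INDEX = [(doc, embed(doc)) for doc in DOCUMENTS]


def retrieve(question: str, k: int = 1) -> list[str]:
    """2. Retrieval: return the k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(INDEX, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


def build_prompt(question: str, snippets: list[str]) -> str:
    """3. Augmentation: place the retrieved snippets in front of the question."""
    context = "\n".join(f"- {s}" for s in snippets)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"


def generate(prompt: str) -> str:
    """4. Generation: placeholder for a call to whichever LLM you use."""
    return f"[LLM response to a {len(prompt)}-character prompt]"


question = "How do I reset my password?"
snippets = retrieve(question)
prompt = build_prompt(question, snippets)
print(prompt)
print(generate(prompt))
```

A word-count vector only matches exact words, so it misses paraphrases; that gap is the reason real systems use learned embeddings to "search by meaning, not just keywords".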

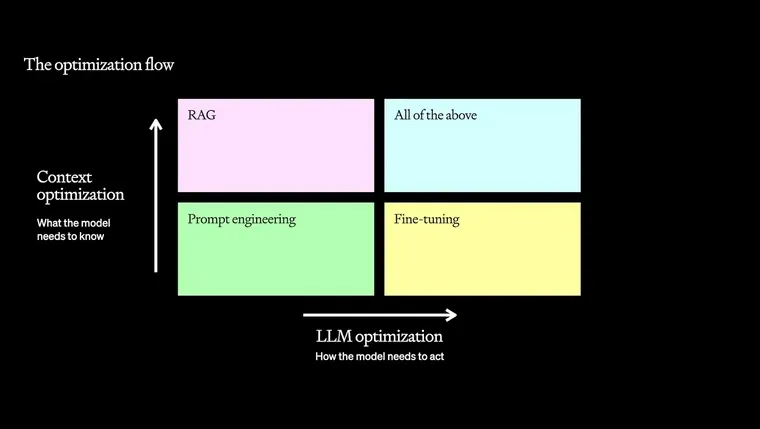

RAG vs Fine-tuning

Fine-tuning is another approach enterprises use to adapt LLMs. With fine-tuning, the model is retrained on new examples so it learns domain-specific language or preferred answer styles. Although powerful, it often comes with higher costs and less flexibility than RAG.

Here’s a quick comparison:

| Dimension | RAG | Fine-tuning |
|---|---|---|
| Implementation approach | Retrieves external knowledge at query time | Modifies the model’s weights with new training data |
| Flexibility of data updates | Instantly update by changing documents in the knowledge base | Requires re-training whenever new data is added |
| Cost/deployment difficulty | Lower: no need for large-scale retraining | Higher: compute-intensive and complex deployment |
| Answer accuracy/style control | Highly accurate for factual queries, with less stylistic control | More control over tone, format, and domain-specific language |
| Suitable use cases | Enterprise Q&A, compliance, customer support, and real-time data access | Brand voice customisation, creative writing, domain-specific styles |

The decision between RAG and fine-tuning largely depends on the goal. RAG is the better choice when accuracy, freshness, and integration with enterprise knowledge are the top priorities. Because it retrieves the latest and most relevant information from official sources at query time, responses stay up to date and grounded in those sources, which makes it particularly valuable in areas like compliance, customer support, or rapidly changing domains. Fine-tuning is the better fit when control over tone, format, or domain-specific language matters most.
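The "flexibility of data updates" row in the comparison can be illustrated with a small sketch. It assumes a toy in-memory `KnowledgeBase` class with naive keyword matching, standing in for a real vector database; the class, its method names, and the sample policies are hypothetical. The point is only that replacing a document changes what the next query retrieves, with no training step in between.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase word set used for naive keyword matching."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class KnowledgeBase:
    """Toy in-memory store; a stand-in for a real vector database."""

    def __init__(self) -> None:
        self.docs: dict[str, str] = {}

    def upsert(self, doc_id: str, text: str) -> None:
        """Add a document or overwrite an existing one. No model training is involved."""
        self.docs[doc_id] = text

    def search(self, query: str) -> str:
        """Return the stored document sharing the most words with the query."""
        query_words = tokens(query)
        return max(self.docs.values(), key=lambda text: len(query_words & tokens(text)))


kb = KnowledgeBase()
kb.upsert("returns", "Customers can return products within 14 days.")
kb.upsert("shipping", "Standard shipping takes three to five business days.")

question = "How long do customers have to return a product?"
before = kb.search(question)

# The policy changes: overwrite the document and query again.
kb.upsert("returns", "Customers can return products within 30 days.")
after = kb.search(question)

print("Before update:", before)
print("After update: ", after)
```

With fine-tuning, the same policy change would mean preparing new training examples and retraining the model before its answers reflected the update.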

RAG Use Cases

RAG has practical applications across multiple industries, especially where accuracy and up-to-date knowledge are critical. Some examples include:

Enterprise knowledge base Q&A

Employees often waste hours browsing through long documents, outdated wikis, or internal portals to find simple answers. With RAG, they can query company policies, IT guidelines, or HR rules and receive direct answers supported by official documents, without having to hunt for the right document themselves. This not only improves productivity but also ensures consistency.

Customer support

Traditional customer support requires teams of representatives answering repetitive questions about product manuals, service details, or warranty information. AI assistants can use product manuals and FAQ databases to respond accurately to customer queries. This reduces support costs and response time, while allowing human agents to focus on more complex issues rather than routine inquiries.

Legal, compliance, and healthcare

Fields that require high precision benefit greatly from RAG, because it draws answers from validated and trustworthy sources. This reduces the risk of errors in situations where mistakes could have serious consequences. For example, a law firm could use RAG to quickly retrieve relevant passages from case law databases, while a healthcare provider could require that AI-powered answers to patient queries be based only on approved medical guidelines.

Real-time information queries

From financial markets to news monitoring, RAG enables AI to access the latest updates that static LLMs would otherwise miss. For example, a financial analyst could use RAG to instantly retrieve the most recent stock performance or market reports, ensuring insights and decisions are based on current and relevant data.

GoInsight.AI: Enterprise-Ready RAG + Knowledge Base Solution

While the value of RAG is clear, building such a system from scratch is complex. Enterprises need to manage document ingestion, vector databases, AI integration, compliance, and workflow orchestration.

This is where GoInsight.AI comes in. GoInsight.AI is a ready-made platform for enterprises to simplify and accelerate the adoption of RAG-powered AI solutions. Instead of piecing together a tech stack, organisations can make use of GoInsight.AI’s integrated features to deploy knowledge-enhanced AI applications securely and efficiently.

Key Features:

- Knowledge Base Integration: With GoInsight.AI, you can easily import and organise enterprise documents.

- Built-in RAG Support: Native retrieval-augmented generation for accurate and specific answers.

- Visual Workflow Engine: You can design AI workflows without complex coding or training.

- Multi-Model Flexibility: Choose and combine different LLMs based on your requirements.

- Security & Compliance: Ensure AI outputs meet enterprise and regulatory standards.

In short, GoInsight.AI unlocks the promise of RAG without the complexity of building it from scratch—making enterprise AI both faster to adopt and safer to scale.

Conclusion

RAG marks an important step toward making AI truly dependable. Its value goes beyond reducing errors or providing accurate answers; it represents a shift in how knowledge is accessed and applied, changing the way people work, collaborate, and innovate. As AI becomes central to business and society, adopting RAG will be key to building systems that are not only smart but also trustworthy. With GoInsight.AI, organisations can take this step with ease, transforming reliable AI into real and lasting impact.
