- What is Chain of Thought Prompting?

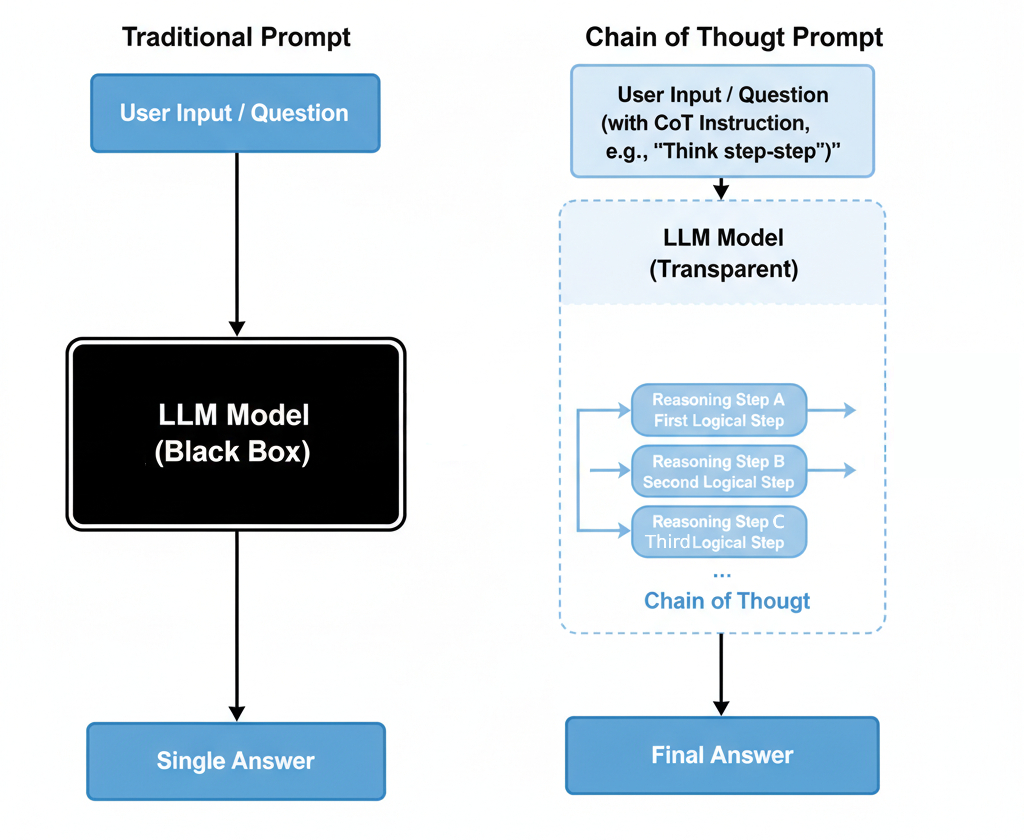

- Direct vs. Step-by-Step CoT Prompting: A Comparison

- The CoT Workflow

- How to Use Chain of Thought Prompting

- The Zero-Shot Method

- The Few-Shot Method

- Practical Applications & Use Cases

- Complex Math and Logic Problems

- Structured Content Generation

- Programming and Code Development

- Advanced CoT Methods and Future Developments

- Limitations and Considerations

- Best Practices for Effective CoT

- Pro Tip: Engineering CoT into Scalable Workflows with GoInsight

Have you ever wondered why LLMs can handle diverse, challenging tasks and provide effective solutions?

To get better performance from them, we can use a set of techniques known as 'prompt engineering'. One of the most valuable is Chain of Thought (CoT) prompting, in which the AI shows its reasoning process rather than just the final answer.

Today, we're exploring Chain of Thought prompting: what it is, how to use it, where it excels, and what its limitations are.

What is Chain of Thought Prompting?

Chain of Thought prompting is a method for interacting with an LLM that encourages the model to explain its reasoning step by step before giving a final answer.

Rather than receiving only the answer, you see the model's reasoning laid out as a logical sequence.

LLMs typically behave like a 'black box': you ask a question and get an answer, but you don't see how the model arrived at it. CoT prompting has the model write out its intermediate steps, giving you a clearer view of how it reached its conclusion.

Because each step is spelled out, the process becomes more transparent, and you can spot and correct errors in the model's logic.

Direct vs. Step-by-Step CoT Prompting: A Comparison

Let's use a simple math question to illustrate the difference:

Question: If a train travels 60 miles in 1.5 hours, what is its average speed?

Direct Answer: "40 mph"

Step-by-step (CoT) Answer:

Distance = 60 miles

Time = 1.5 hours

Speed = Distance ÷ Time = 60 ÷ 1.5

60 ÷ 1.5 = 40

Final Answer: 40 mph

For simple problems, direct answers often suffice. However, for complex, multi-step reasoning tasks, CoT makes it much easier to identify errors in the AI's logic and provides clear points where you can intervene with corrections.

The CoT Workflow

A CoT response typically moves through three phases:

- Problem Deconstruction: The AI breaks down complex problems into smaller, manageable components

- Logical Reasoning: The model builds connections between steps, ensuring each step flows logically to the next

- Synthesis & Verification: Finally, it combines results from each step to produce a verifiable final answer

This approach lets LLMs work through problems in a way that resembles human reasoning, which can make their outputs easier to follow and to check.
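As an analogy only (this is not how a model computes internally), the three phases can be mapped onto an explicit calculation for the train example from the previous section. The `ReasoningTrace` class and `average_speed` function are hypothetical names chosen for this sketch.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class ReasoningTrace:
    """Records each intermediate step so the path to the answer can be inspected."""
    steps: List[Tuple[str, float]] = field(default_factory=list)

    def record(self, label: str, value: float) -> float:
        self.steps.append((label, value))
        return value


def average_speed(distance_miles: float, time_hours: float) -> Tuple[float, ReasoningTrace]:
    trace = ReasoningTrace()

    # Phase 1, problem deconstruction: isolate the known quantities.
    distance = trace.record("Distance (miles)", distance_miles)
    duration = trace.record("Time (hours)", time_hours)

    # Phase 2, logical reasoning: apply speed = distance / time.
    speed = trace.record("Speed = distance / time (mph)", distance / duration)

    # Phase 3, synthesis and verification: work backwards to check the result.
    if abs(speed * duration - distance) > 1e-9:
        raise ValueError("speed x time does not reproduce the distance")
    return speed, trace


if __name__ == "__main__":
    speed, trace = average_speed(60, 1.5)
    for label, value in trace.steps:
        print(f"{label}: {value:g}")
    print(f"Final Answer: {speed:g} mph")
```

Keeping every intermediate value in the trace is what makes the final check possible: a wrong step shows up as a specific entry rather than just a wrong answer.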

How to Use Chain of Thought Prompting

The Zero-Shot Method

Zero-shot CoT requires no examples: you simply prompt the model to reason by adding a trigger phrase after your question, such as:

• "Let's think step by step."

• "Break this down into steps."

• "Show your reasoning."

Example:

Prompt: Adam's mother baked cookies and gave him 10. Adam went to school and shared them with his friends. He gave 3 cookies to Michael and 3 cookies to Sarah. Adam went back home and wanted more cookies, so his mother gave him 5 more. How many cookies does Adam have?

Let's think step by step.

Response:

Adam starts with 10 cookies

He gives away 3 to Michael and 3 to Sarah → 10 − 3 − 3 = 4

His mother gives him 5 more → 4 + 5 = 9

Answer: 9 cookies

Zero-shot CoT works well for quick calculations, logic puzzles, and straightforward reasoning tasks.
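In code, zero-shot CoT amounts to appending a trigger phrase to the question. The sketch below also pulls out the final answer, assuming the response ends with a line starting with `Answer:` or `Final Answer:` as in the examples in this article; real model output may not follow that format, so the helper returns `None` when no such line is found. Both function names are hypothetical.

```python
import re
from typing import Optional

# Trigger phrases taken from the list above.
TRIGGER_PHRASES = (
    "Let's think step by step.",
    "Break this down into steps.",
    "Show your reasoning.",
)

# Matches lines such as "Answer: 9 cookies" or "Final Answer: 40 mph".
_ANSWER_LINE = re.compile(
    r"^[ \t]*(?:final[ \t]+)?answer:[ \t]*(.+)$", re.IGNORECASE | re.MULTILINE
)


def build_zero_shot_cot_prompt(question: str, trigger: str = TRIGGER_PHRASES[0]) -> str:
    """Append a reasoning trigger after the question; no worked examples are needed."""
    return f"{question.strip()}\n\n{trigger}"


def extract_final_answer(response: str) -> Optional[str]:
    """Return the text of the last 'Answer:' line, or None if the model did not produce one."""
    matches = _ANSWER_LINE.findall(response)
    return matches[-1].strip() if matches else None
```

Taking the last matching line is a deliberate choice: if the model restates an answer after correcting itself, the later line is the one you usually want.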

The Few-Shot Method

Few-shot CoT involves providing 2-3 example problems with complete reasoning before asking your actual question. This "teaches" the model your preferred reasoning format.

Template:

Provide 2–3 relevant examples before your real question:

• Problem 1 → Reasoning → Answer

• Problem 2 → Reasoning → Answer

• Problem 3 → Reasoning → Answer

Ask your real question.

Example:

If a rectangle has sides 4 and 5, its area is 4 × 5 = 20

If a rectangle has sides 3 and 6, its area is 3 × 6 = 18

If a rectangle has sides 9 and 6, its area is 9 × 6 = 54

Your Question: If a rectangle has sides 20 and 31, what is its area?

AI Response:

Following the pattern:

Area of rectangle = length × width

Here, the sides are 20 and 31

Multiply: 20 × 31 = 620

Answer: 620

Few-shot CoT excels at complex problems that require a specific reasoning pattern, such as multi-step mathematics, structured writing tasks, or coding challenges.
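The few-shot template can also be assembled programmatically, which keeps the example format consistent across many questions. This is a minimal sketch: the `Problem:` / `Reasoning:` / `Answer:` labels and the function name are assumptions, not a required format, and the rectangle problems above are reused as sample data.

```python
from typing import List, Tuple

# Each worked example is (problem, reasoning, answer).
Example = Tuple[str, str, str]


def build_few_shot_cot_prompt(examples: List[Example], question: str) -> str:
    """Lay out worked examples in Problem -> Reasoning -> Answer order, then the real question."""
    blocks = [
        f"Problem: {problem}\nReasoning: {reasoning}\nAnswer: {answer}"
        for problem, reasoning, answer in examples
    ]
    # End on an open "Reasoning:" line so the model continues in the same format.
    blocks.append(f"Problem: {question}\nReasoning:")
    return "\n\n".join(blocks)


RECTANGLE_EXAMPLES: List[Example] = [
    ("A rectangle has sides 4 and 5. What is its area?", "Area = length × width = 4 × 5", "20"),
    ("A rectangle has sides 3 and 6. What is its area?", "Area = length × width = 3 × 6", "18"),
    ("A rectangle has sides 9 and 6. What is its area?", "Area = length × width = 9 × 6", "54"),
]

if __name__ == "__main__":
    print(build_few_shot_cot_prompt(RECTANGLE_EXAMPLES, "A rectangle has sides 20 and 31. What is its area?"))
```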

Practical Applications & Use Cases

Complex Math and Logic Problems

Mathematical word problems often require tracking multiple variables and operations in sequence. CoT prompting guides the model to map out its reasoning path carefully, which often improves accuracy.

Example Prompt: A bookstore offers a 20% discount on a $50 book. A customer has a coupon for an additional $5 off each book, applied after the discount. If they buy 3 books, what's the total cost? Show your steps.

AI Response:

Step 1: Base price per book = $50

Step 2: Apply 20% discount (20% of $50 = $10) → $50 - $10 = $40

Step 3: Apply $5 coupon → $40 - $5 = $35

Step 4: Price per book = $35. For 3 books → 3 × $35 = $105

Total: $105
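A short script is a handy way to double-check this kind of arithmetic. Because a coupon could apply per book or once per order, this sketch computes both readings; the response above uses the per-book reading. `total_cost` is a hypothetical helper, and `Decimal` is used to avoid floating-point rounding on money values.

```python
from decimal import Decimal


def total_cost(price: Decimal, discount_rate: Decimal, coupon: Decimal,
               quantity: int, coupon_per_book: bool = True) -> Decimal:
    """Apply the percentage discount first, then the fixed coupon."""
    discounted = price * (1 - discount_rate)  # $50 × 0.80 = $40
    if coupon_per_book:
        # Coupon taken off every book, as in the response above.
        return (discounted - coupon) * quantity
    # Coupon applied once to the whole order.
    return discounted * quantity - coupon


if __name__ == "__main__":
    args = (Decimal("50"), Decimal("0.20"), Decimal("5"), 3)
    print(total_cost(*args))                         # per-book coupon
    print(total_cost(*args, coupon_per_book=False))  # one coupon per order
```

With these inputs the per-book reading gives $105, matching the response, while a single coupon per order would give 3 × $40 − $5 = $115.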

Structured Content Generation

Without guidance, AI models can produce poorly organized reports or summaries. CoT prompting addresses this by having the model first create a logical structure and then develop each section systematically.

Example Prompt: Write an outline for a blog post on remote work productivity. First identify key categories, then subtopics, then arrange them logically.

AI Response:

Introduction — why remote productivity matters

Mindset & Foundations — autonomy, goal-setting, boundaries

Workspace & Tools — ergonomic setup, essential applications

Communication — asynchronous vs. synchronous, clear handoffs

Time Management — time-blocking, priority setting

Well-being — breaks, routines, social connection

Conclusion — key takeaways and actionable next steps
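The same "structure first, then content" idea can be split into separate model calls. In this sketch, `model` is a hypothetical placeholder for any function that sends a prompt to an LLM and returns its reply; no specific provider's API is assumed, and the prompt wording is illustrative.

```python
from typing import Callable, Dict, List

# Any function that takes a prompt string and returns the model's text reply.
# This is a placeholder: plug in whichever LLM client you actually use.
Model = Callable[[str], str]


def outline_then_draft(topic: str, model: Model) -> Dict[str, str]:
    """Two-stage generation: ask for the structure first, then write each section."""
    # Stage 1: request only the section titles, one per line.
    outline_reply = model(
        f"List the main sections of a blog post on {topic}, one title per line. "
        "Do not write the sections yet."
    )
    sections: List[str] = [line.strip() for line in outline_reply.splitlines() if line.strip()]

    # Stage 2: draft each section with the full outline in view, so sections stay consistent.
    outline_text = "\n".join(sections)
    drafts: Dict[str, str] = {}
    for section in sections:
        drafts[section] = model(
            f"Outline for a blog post on {topic}:\n{outline_text}\n\n"
            f"Write the section titled '{section}'."
        )
    return drafts
```

Passing the whole outline into every stage-two prompt is the key design choice: each section is written separately, but the model still sees where it fits in the overall structure.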

Programming and Code Development

For coding tasks, CoT prompting encourages planning before implementation, which tends to produce cleaner, more maintainable code.

Example Prompt: Write a Python function that reads a text file and counts word frequency. First explain your approach, then provide the code.

AI Response:

Approach:

Step 1: Open file in read mode with error handling

Step 2: Read and normalize text (lowercase, remove punctuation)

Step 3: Split text into individual words

Step 4: Use a dictionary or collections.Counter to track word frequencies

Step 5: Return the frequency dictionary

Code: The response would then continue with an implementation of these steps.
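One possible implementation of those five steps is sketched below. It assumes a UTF-8 text file and handles a missing or unreadable file by printing a message to stderr and returning an empty `Counter`; raising the error instead would be an equally reasonable choice. Stripping `string.punctuation` also turns contractions like "don't" into "dont".

```python
import string
import sys
from collections import Counter


def word_frequency(path: str) -> Counter:
    """Count how often each word appears in a text file (case-insensitive, punctuation ignored)."""
    # Step 1: open the file in read mode, handling a missing or unreadable file.
    try:
        with open(path, "r", encoding="utf-8") as f:
            text = f.read()
    except OSError as err:
        print(f"Could not read {path}: {err}", file=sys.stderr)
        return Counter()

    # Step 2: normalize by lowercasing and removing ASCII punctuation.
    text = text.lower().translate(str.maketrans("", "", string.punctuation))

    # Step 3: split on whitespace into individual words.
    words = text.split()

    # Steps 4 and 5: count with Counter (a dict subclass) and return it.
    return Counter(words)


if __name__ == "__main__" and len(sys.argv) > 1:
    for word, count in word_frequency(sys.argv[1]).most_common(10):
        print(f"{word}: {count}")
```

Saved as a script, running it with a file path as its only argument prints the ten most common words.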

Advanced CoT Methods and Future Developments

Emerging Variations

- Tree of Thoughts (ToT): Unlike linear CoT reasoning, ToT generates multiple branching possibilities at each step, allowing the AI to explore different solution paths and pursue the most promising ones.

- Self-Correction Prompting: Adding reflection prompts like "Review your reasoning above. Are there any mistakes?" encourages the model to verify its logic before finalizing answers.
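To make the branching idea concrete, here is a toy breadth-limited search in the spirit of ToT. In a real ToT setup the model itself would propose candidate next steps and rate them; here two ordinary Python functions, `propose` and `score`, stand in for those model calls, and the puzzle (reach a target number using +3, ×2, or −1) is purely illustrative.

```python
from typing import List, Optional, Tuple

# A partial solution ("thought"): the current value and the operations used to reach it.
State = Tuple[int, Tuple[str, ...]]


def propose(state: State) -> List[State]:
    """Stand-in for the model proposing candidate next steps from a partial solution."""
    value, path = state
    return [
        (value + 3, path + ("+3",)),
        (value * 2, path + ("*2",)),
        (value - 1, path + ("-1",)),
    ]


def score(state: State, target: int) -> int:
    """Stand-in for the model rating how promising a partial solution looks (higher is better)."""
    return -abs(target - state[0])


def tree_of_thoughts(start: int, target: int, breadth: int = 2, depth: int = 4) -> Optional[State]:
    """Expand every kept branch, then keep only the `breadth` best-scoring branches per level."""
    frontier: List[State] = [(start, ())]
    for _ in range(depth):
        candidates = [child for state in frontier for child in propose(state)]
        for candidate in candidates:
            if candidate[0] == target:
                return candidate
        # Prune: only the most promising branches survive to the next level.
        candidates.sort(key=lambda s: score(s, target), reverse=True)
        frontier = candidates[:breadth]
    return None


if __name__ == "__main__":
    print(tree_of_thoughts(1, 10))
```

The contrast with linear CoT is the pruning step: several partial paths are kept alive at once, and weak ones are dropped instead of being followed to the end.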

Integration with External Tools

Researchers are developing hybrid approaches that combine CoT reasoning with external resources such as databases, calculators, and search engines, aiming to improve accuracy while keeping the reasoning process transparent.

Limitations and Considerations

Cost and Efficiency

CoT generates longer outputs, consuming more tokens and computational resources. This can become expensive for large-scale applications or frequent use.

Hallucination Risk

Step-by-step reasoning doesn't eliminate hallucinations—it can sometimes make false logic appear more convincing. Always verify critical reasoning steps manually.
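Part of that checking can be automated for arithmetic. The sketch below scans a reasoning trace for steps written as `a op b ... = c`, recomputes the left side, and flags mismatches. It only understands plain numbers and + − × ÷ (no units, currency symbols, or parentheses), so it supplements manual review rather than replacing it; `check_arithmetic` is a hypothetical helper name.

```python
import ast
import math
import operator
import re
from typing import List, Tuple

# Map the symbols used in written reasoning onto Python operators.
_SYMBOLS = str.maketrans({"−": "-", "×": "*", "÷": "/"})
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul, ast.Div: operator.truediv}

# A number, then one or more "operator number" pairs, then "= claimed result".
_NUM = r"\d+(?:\.\d+)?"
_STEP = re.compile(rf"({_NUM}(?:\s*[-+*/]\s*{_NUM})+)\s*=\s*({_NUM})")


def _evaluate(node: ast.AST) -> float:
    """Evaluate a parsed expression containing only numbers and + - * / (no eval())."""
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_evaluate(node.left), _evaluate(node.right))
    raise ValueError(f"unsupported expression: {ast.dump(node)}")


def check_arithmetic(trace: str) -> List[Tuple[str, bool]]:
    """Return (step, is_correct) for every arithmetic step found in a reasoning trace."""
    results = []
    for expr, claimed in _STEP.findall(trace.translate(_SYMBOLS)):
        try:
            actual = _evaluate(ast.parse(expr, mode="eval").body)
            ok = math.isclose(actual, float(claimed))
        except ZeroDivisionError:
            ok = False
        results.append((f"{expr} = {claimed}", ok))
    return results
```

Any `False` entry points to a step worth re-checking by hand; an empty result only means no checkable arithmetic was found, not that the reasoning is sound.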

Model Requirements

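Not all AI models handle CoT effectively. Its benefits tend to show up mainly in larger, more capable models; smaller models may produce fluent reasoning chains that still lead to wrong answers. Models trained on reasoning-focused data generally handle CoT more reliably.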

Best Practices for Effective CoT

• Use CoT when accuracy matters more than brevity

• Provide detailed, specific prompts with relevant context

• Include background information, sources, and constraints

• Manually verify critical reasoning steps

• Combine CoT with external fact-checking tools when possible

• Reserve CoT for complex tasks that genuinely benefit from step-by-step reasoning

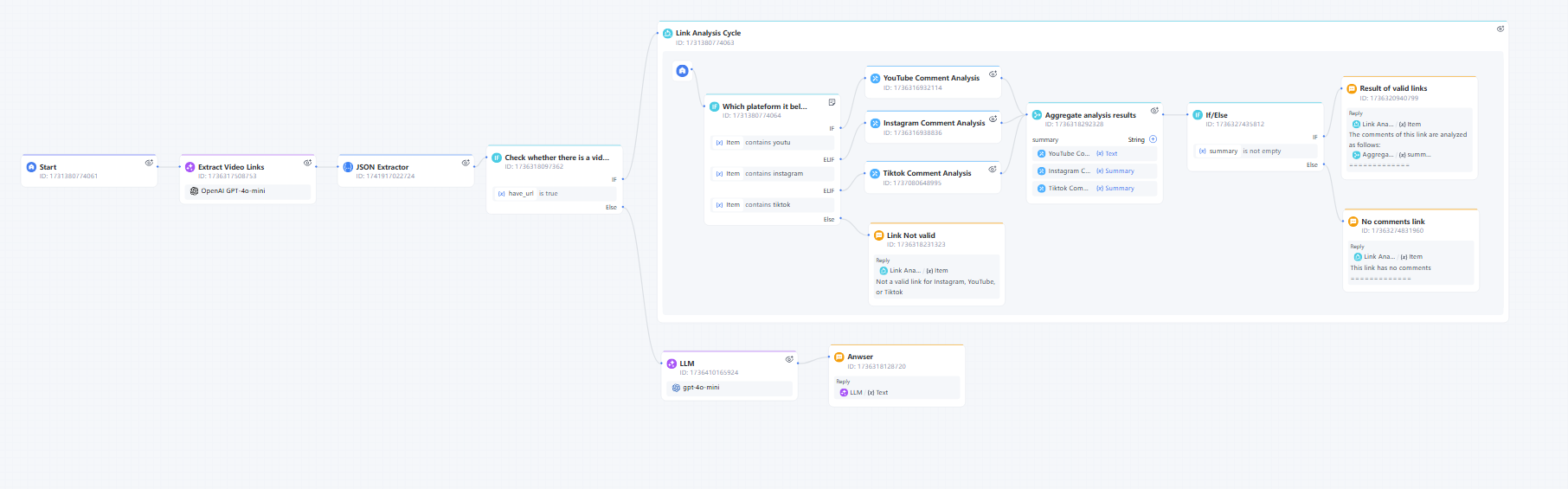

Pro Tip: Engineering CoT into Scalable Workflows with GoInsight

You've seen the power of the Chain of Thought (CoT) mindset in solving complex problems. However, manually writing and managing these multi-step prompts every time quickly becomes inefficient and unsustainable at scale.

Imagine the boost in efficiency if you could transform your CoT thought process into an automated, repeatable workflow, like building with simple blocks.

GoInsight's Workflow Builder is the CoT engineering tool built for you. It visualizes multi-step reasoning as drag-and-drop nodes, allowing you to easily construct and manage complex AI processes.

Conclusion

Chain of Thought prompting transforms AI from a silent, enigmatic black box into a transparent reasoning partner. It gives you a clear look at the steps the model took to arrive at its answer, making it easier to follow the process and judge how far to trust the output.

At its current stage, CoT prompting still has drawbacks. Even so, it can be very effective, and it will likely be a cornerstone of how we interact with AI in the future.
